Where We’re Going, We Don’t Need Maps (But Maybe a Little Help from AI)

Imagry CEO Eran Ofir on the limits of HD maps, the promise of real-time perception, and the messy middle ground between them

The debate between mapless and HD-mapped autonomy sits at the heart of today’s self-driving conversation. HD maps offer unmatched precision and redundancy, turning the world into a navigable database — but they also demand enormous upkeep and restrict where vehicles can operate. Mapless systems, by contrast, interpret the road entirely through real-time perception, trading reliability and predictability for adaptability and scale. Each approach has its champions, and each carries trade-offs that go beyond engineering — shaping everything from business models to regulatory strategy.

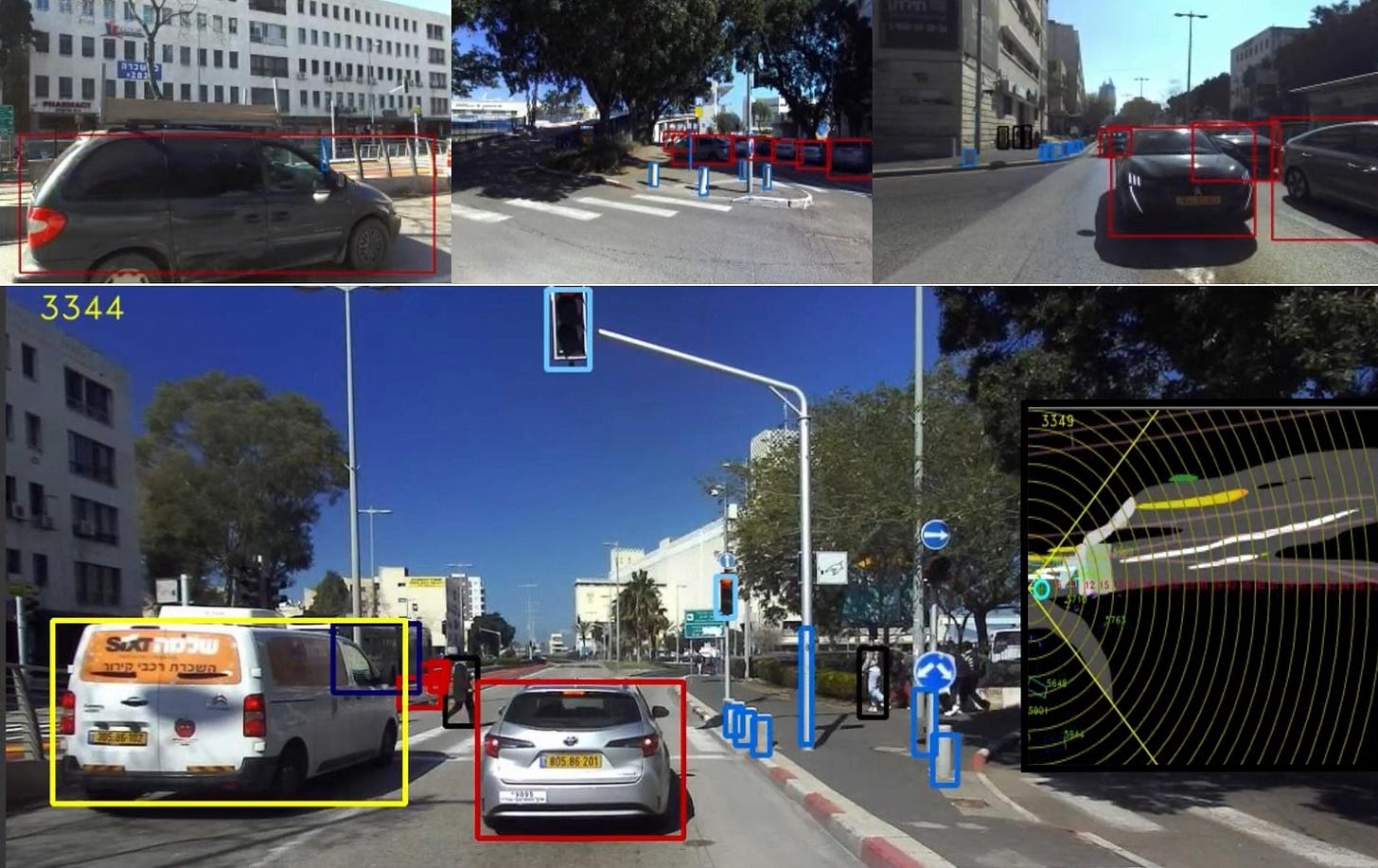

Waymo has famously taken an HD-map approach, while Tesla has been an even more vocal proponent of going mapless. Others have gone mapless as well: Imagry, founded in Haifa and led by CEO Eran Ofir, is betting that a lighter, perception-driven stack can make autonomy more deployable and affordable. The company’s camera-based system has been tested on buses and shuttles in Israel, Japan, Germany, and the U.S., with a focus on constrained but commercially meaningful routes. As the industry moves past the hype cycle of robotaxi demos and into the harder work of sustainable deployment, Imagry’s experience offers a useful lens into what autonomy looks like when it has to perform outside the lab, whether that future is mapped to the centimeter or not. We checked in with Eran to get his latest thoughts on the mapping debate.

Ottomate News: To start, give us the elevator pitch for Imagry.

Eran Ofir: Imagry delivers vision-based autonomous driving solutions without HD maps, lidar, or the cloud. We are pioneering Generative Autonomy: real-time intelligence that drives machines in the physical world. Our AI-centric platform, unlike rule-based systems, learns from experience and adapts to any road, vehicle, or condition, just like a human, only better.

Already deployed across transit and fleet vehicles, Imagry is solving the scalability challenge for both public transportation and passenger cars. Our real-time, hardware-agnostic architecture integrates seamlessly with existing platforms, giving automakers and transit operators a cost-effective, production-ready autonomy solution.

The next leap in GenAI isn’t a chatbot. It’s a driver. Today we drive buses and cars. Tomorrow, we power every intelligent machine that moves.

Most AV stacks still lean on HD maps, even if they talk about “reducing” dependence. What made you confident enough to go fully mapless, and where do you see that choice giving Imagry an enduring advantage vs. players like Mobileye or Wayve who also push lower-map approaches?

Imagry has always believed that an AI autonomous driving system should completely mimic the way people think and act. We humans don’t drive with HD maps, since we see the road and understand it. It has been our premise since 2018 that a full, uncompromised AI solution is the only practical approach to autonomous driving. We knew this approach would eventually be viable and ultimately prevail once in-vehicle edge computing became capable enough, which happened approximately two years ago. Currently only Tesla, Imagry, and Wayve have such capability. This provides us with a huge advantage in project bids, as it means that our vehicles and buses can drive everywhere, and not just in a small, limited geo-fenced area dictated by the HD map used by the less advanced players in the market.

You’ve previously said big parts of the AV industry spent billions on flashy demos while you focused on making autonomy actually work in daily service. What did you do differently in your first real deployments—like the autonomous buses in Israel—and what surprised you most when theory met messy real-world operations?

Our approach wasn’t to do demos that would look nice but be built on weak technology. Rather, from the get-go we built the foundation of an AI system that learns by imitation. Also, we didn’t take the unsupervised approach, which is quick and dirty, but the supervised approach to AI training, which is much slower but serves as a very solid foundation for training the neural networks.

When we got on the road (in the U.S., Germany, Japan, Israel…), we learned that lab simulations have little to do with real autonomous driving on public, mixed-traffic roads. We learned that keeping latency to a minimum is of paramount importance, and that every small change there leads to significantly different behavior. We learned that urban driving (which is our forte) is much more complex than highway driving, and we learned how sophisticated a system must be in order to drive on both sides of the road (most players in the world drive only on the right-hand side or the left-hand side, but not both with the same system, as Imagry does).

Imagry is camera-only and hardware-agnostic, which may be attractive on cost—but safety regulators and OEMs love redundancy. How do you make the safety case for a vision-only system, and what are the long tail risks you worry about?

It has been proven time and again that the need for lidar and radar is not real and was only pushed by their vendors (all of whom have lost >90% of their value since coming on the scene). In the AI era, a camera-based system that sees 100m-300m and 360 degrees doesn’t need lidar or radar that anyhow don’t understand what they see and can’t understand colors and signs. We humans are not equipped with lidar and radars and yet regulators trust us to drive well! Also, given that the response time of our system is at least 3 times faster than a human, it is safer than a human driver. Tesla, XPeng, Volvo, and Imagry are already using vision-only systems, and I expect that by the end of the decade, most OEMs will adopt the vision-only approach. Cameras are continually improving. Now we also drive in dark nights using thermal cameras. We work closely with regulators globally, and they understand now that the old adage “hardware will solve it all” has become extinct; now it’s AI software that rules.

Who do you see as your main customers over the next five years: transit operators, Tier-1s, or the OEMs themselves? And what do partnerships look like in terms of integration, revenue models, data sharing and the like?

Our target customers are public transportation operators (PTOs) and municipalities for our autonomous buses, and fleets for our roboshuttle offering. Yes, we do work with OEMs, but as partners that provide the pre-integrated vehicles our customers need.

You’re live or testing in multiple regions—from Israel to the U.S., Germany and Japan. What are the hidden bottlenecks to scaling from a handful of routes and vehicles to thousands—are they primarily technical, regulatory, operational, or commercial?

The main bottleneck is regulation, specifically the length of time required to obtain an L4 driverless permit in a country. We already see cases where the public operator is getting closer to being able to take the drivers out, and they are preparing budgets to purchase dozens of autonomous buses for their fleets during 2026 and early 2027. It’s only a matter of time. The technology is there, but much still needs to happen in the market with respect to the integration of multiple systems (e.g., connecting to ticketing, billing, and the control room) before the autonomous vehicle is ready for full-scale deployment. I would give it around five years until the full solution becomes standard.

Imagry talks about bio-inspired, imitation-learning-based driving. As models get larger and more “world-model-like,” how does your stack evolve—do you see a path to a single generalized “AI driver,” or will vehicular autonomy always be highly domain-specific?